Toward a gaze-independent BCI using the c-VEP protocol

A Brain-Computer Interface (BCI) is a technology that enables direct communication between the brain and an external device, bypassing traditional neuromuscular pathways. It works by detecting and interpreting brain signals, which are then translated into commands for controlling computers, prosthetics, or other electronic devices.

Gaze-independent BCIs are particularly relevant for individuals who are fully paralyzed and unable to move their eyes, as they provide a means of interaction that does not rely on eye movement or muscle control. By using brain signals alone, these systems can enable communication and environmental control, significantly improving the quality of life for people with locked-in syndrome or similarly severe neuromuscular conditions.

Abstract:

A brain–computer interface (BCI) speller typically relies on gaze fixation, which excludes users with impaired oculomotor control, like people living with late-stage amyotrophic lateral sclerosis. We present the first step toward a gaze-independent BCI using the code-modulated visual evoked potential (c-VEP). In our study, participants fixated centrally while covertly attending to one of two bilaterally presented, pseudo-randomly flashing stimuli. From the recorded electroencephalography, we independently analyzed three neural signatures of covert visuospatial attention: (1) the c-VEP, (2) occipital alpha-band lateralization, and (3) the ERP. From these neural signals, we achieved a grand average classification accuracy of 67% (c-VEP), 88% (alpha), and 98% (ERP). This demonstrates the viability of covert spatial attention as a control signal for gaze-independent c-VEP BCIs.
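To give a feel for the second signature, here is a minimal sketch of how occipital alpha-band lateralization could be turned into a left/right decision. It is not the pipeline used in the study. It assumes just two hypothetical channels (one per occipital hemisphere), an 8 to 12 Hz alpha band, a 250 Hz sampling rate, and synthetic data in which alpha is weaker over the hemisphere contralateral to the attended side. All function names are made up for illustration.

```python
import numpy as np


def band_power(signal, fs, f_lo=8.0, f_hi=12.0):
    """Mean FFT power of `signal` within [f_lo, f_hi] Hz."""
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(signal)) ** 2
    mask = (freqs >= f_lo) & (freqs <= f_hi)
    return power[mask].mean()


def alpha_lateralization_index(left_occ, right_occ, fs):
    """(right - left) / (right + left) alpha power, bounded in [-1, 1]."""
    p_left = band_power(left_occ, fs)
    p_right = band_power(right_occ, fs)
    return (p_right - p_left) / (p_right + p_left)


def decode_attended_side(left_occ, right_occ, fs):
    """Assumption: alpha is weaker contralateral to the attended side,
    so a negative index (less right-hemisphere alpha) means 'left'."""
    index = alpha_lateralization_index(left_occ, right_occ, fs)
    return "left" if index < 0 else "right"


def simulate_trial(attended, fs=250, duration=2.0, seed=0):
    """Synthetic two-channel trial: a 10 Hz rhythm plus white noise.
    The amplitudes are arbitrary illustration values, not measured data."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(fs * duration)) / fs
    alpha = np.sin(2 * np.pi * 10.0 * t)
    # The hemisphere contralateral to the attended side gets the weaker alpha.
    amp_left, amp_right = (2.0, 1.0) if attended == "left" else (1.0, 2.0)
    left_occ = amp_left * alpha + rng.normal(0.0, 0.5, t.size)
    right_occ = amp_right * alpha + rng.normal(0.0, 0.5, t.size)
    return left_occ, right_occ


if __name__ == "__main__":
    for side in ("left", "right"):
        left_occ, right_occ = simulate_trial(side, seed=42)
        print(side, "->", decode_attended_side(left_occ, right_occ, fs=250))
```

With real recordings, the index would more plausibly be computed per trial over groups of electrodes and fed to a classifier rather than thresholded at zero.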

Virtual Touch:

Tagging mid-air Haptics

Back in 2023, I did an internship at imec-mict-UGent, essentially a joint research hub in multimedia and operator systems between industry and academia. Initially I intended to pursue a project investigating detrimental effects of cycling between augmented reality-supplemented work and regular work. This idea turned out to be too broad for the 6-month duration of my stay. As a new project, I was asked to do an EEG event-related potential (ERP) analysis for a Master’s thesis, as the person was unable to do so for obscure reasons. I gladly accepted, as it gave me the opportunity to work with real EEG data. I learned about signal processing, electrophysiology, sensor space and source space, and how it all doesn’t make sense but somehow still works.

The ERP project was close to being finished when my supervisor, Klaas, approached me with a new project concerning neural markers of an innovative mode of tactile sensation: ultrasound-based haptics. I gladly accepted, finished the ERP analysis, and started ideating. Fortunately, a doctoral school was happening at UGent, and since I knew people there, I was invited to join the lectures. On that day, I learned about frequency tagging—a method of setting neural markers through the periodic presentation of a stimulus. The coolest part is that it is modulated by attention, meaning the attention directed to the stimulus can be read out from M/EEG recordings.

As soon as I had the method and means, I started running the first pre-pilots. During this process, I had access to the EEG system, so I plugged myself in and observed my neural oscillations in real-time. It was quite a fascinating experience, similar to the time I spent hours looking through my MRI scan.

In the remaining months I managed to get 5 subjects in with a 3.5-times revised experimental protocol. I did some preliminary analysis during that time, but the main analysis and write-up happened in July 2024.

Abstract:

Touchless interfaces represent a promising advancement in human-machine interaction, with midair haptic interfaces gaining significant attention. These interfaces use focal ultrasound (US) beams projected onto the skin to create vibrational sensations, enabling dynamic haptic feedback through hand tracking. This technology has broad applications across industries such as automotive, extended reality, medical training, and immersive marketing.

To the best of my knowledge, this EEG study is the first to extend frequency-tagged neural markers to the domain of ultrasound-based haptics. Specifically, a low-frequency haptic pulse was employed to drive steady-state somatosensory evoked potentials (SSSEPs) in the subjects' EEG, which was then analyzed by spectral analysis and multivariate generalized eigendecomposition (GED).
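The spectral side of this can be sketched in a few lines: if a periodic stimulus entrains the signal, the amplitude at the driving frequency should stand out against the neighboring frequency bins. The snippet below is a minimal illustration on synthetic data, not the analysis code of the study. The 20 Hz tag, the 500 Hz sampling rate, and the amplitudes are arbitrary placeholders (the actual driving frequency is not stated here), and the function names are hypothetical.

```python
import numpy as np


def amplitude_spectrum(signal, fs):
    """Hann-windowed single-sided amplitude spectrum (arbitrary units)."""
    window = np.hanning(signal.size)
    spectrum = np.abs(np.fft.rfft(signal * window))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    return freqs, spectrum


def tag_snr(signal, fs, tag_hz, n_neighbors=10, n_skip=1):
    """Amplitude at the tag frequency divided by the mean amplitude of
    `n_neighbors` bins on each side, skipping `n_skip` adjacent bins
    that still carry leakage from the tagged bin."""
    freqs, spectrum = amplitude_spectrum(signal, fs)
    k = int(np.argmin(np.abs(freqs - tag_hz)))
    lower = np.arange(k - n_skip - n_neighbors, k - n_skip)
    upper = np.arange(k + n_skip + 1, k + n_skip + 1 + n_neighbors)
    neighbors = np.concatenate([lower, upper])
    neighbors = neighbors[(neighbors >= 0) & (neighbors < spectrum.size)]
    return spectrum[k] / spectrum[neighbors].mean()


def simulate_channel(tag_hz, tag_amplitude, fs=500, duration=10.0, seed=0):
    """White noise plus an optional sinusoid at the tag frequency."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(fs * duration)) / fs
    noise = rng.normal(0.0, 1.0, t.size)
    return tag_amplitude * np.sin(2 * np.pi * tag_hz * t) + noise


if __name__ == "__main__":
    fs, tag_hz = 500, 20.0  # placeholder values, not the study's settings
    entrained = simulate_channel(tag_hz, tag_amplitude=0.5, fs=fs, seed=1)
    baseline = simulate_channel(tag_hz, tag_amplitude=0.0, fs=fs, seed=2)
    print("SNR with tag:   ", round(tag_snr(entrained, fs, tag_hz), 2))
    print("SNR without tag:", round(tag_snr(baseline, fs, tag_hz), 2))
```

An SNR near 1 means the driving frequency does not stand out from its surroundings. Real EEG has a strongly colored (roughly 1/f) background, which is one reason for comparing against nearby bins only.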

Results indicate substantial difficulties in entraining the frequency tag, as no distinct peak at the driving frequency was found in sensor space. On the contrary, power spectral density (PSD) analysis shows a suppression at the driving frequency, both in absolute values and, in some cases, partly also relative to ipsilateral sites. GED analysis, however, points towards an entrainment of the driving frequency in accordance with the somatosensory template. Additionally, large variance between and within participants was observed in terms of PSD estimations and GED components, which is discussed in the limitations section.

Despite the inconclusive results, this pilot study provides valuable insights for a refined experimental protocol that can be run at a larger scale to establish reliable neural markers for ultrasound-based haptics. This conceptual foundation can be used to enable comparisons with traditional haptics on a neural level, making way for investigations of cognitive processes such as attentional biases in operator systems.

Conditioning the Speed-Accuracy-Tradeoff:

Computational modeling of decision making

My Bachelor's thesis. This work stems from my internship at Ghent University and is meant to serve as an introduction to the idea of computational modeling and cognitive control. It is written to be accessible for everyone and therefore begins with two narrative sections to set the stage for the scientific part of the article.

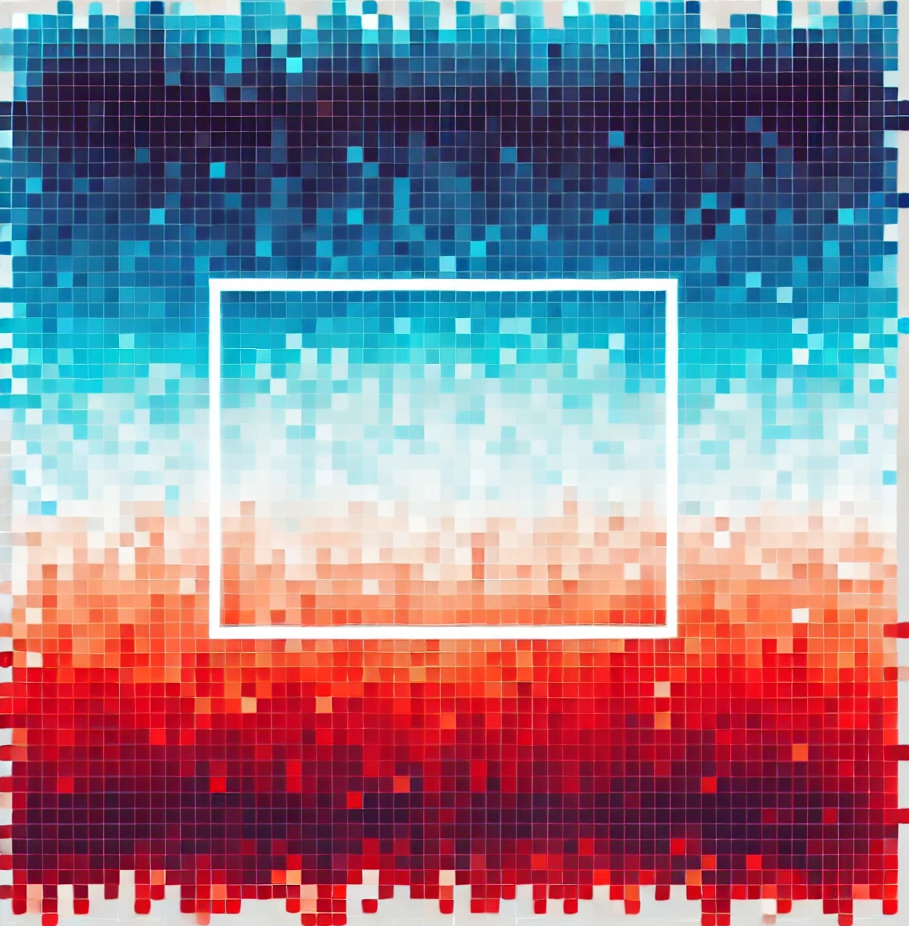

Lastly, I’ve used simulation methods to let an agent make decisions in a number of different configurations. The outputs of such runs are ‘incentive maps’ (working title), similar to the image displayed on the right. This yielded some interesting insights on how to approach the problem from different perspectives.

Abstract:

Recent advances in cognitive neuroscience have brought forth a novel perspective on the control problem, grounding it in associative learning – a mechanism largely seen as the dichotomous counterpart of cognitive control. An important implication of this theory is that higher-order functions are subject to the same reinforcement learning principles as lower-level behavior. Following this notion, the prediction can be made that humans adjust their control parameters based on learned associations with contextual cues.

The present study was designed to explore this prediction by employing a fast-paced visual discrimination task featuring two contexts, wherein participants were nudged to adopt high and low caution, respectively, in their decision making, which was quantified by the Drift-Diffusion Model (DDM). Data analysis points towards a null effect, which we attribute mainly to flawed design elements, and we conclude that these need to be addressed before a conclusion can be drawn.
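For readers new to the DDM, the link between caution and the speed-accuracy tradeoff can be made concrete with a small simulation. The sketch below is an illustration under stated assumptions and not the fitting procedure of the thesis: it simulates an unbiased diffusion process with a simple Euler scheme, treats caution as the boundary separation, and uses made-up parameter values (drift 1.5, boundaries 1.0 and 2.0, non-decision time 0.3 s). The function names are hypothetical.

```python
import numpy as np


def simulate_ddm(drift, boundary, n_trials=2000, non_decision=0.3,
                 noise_sd=1.0, dt=0.001, max_time=5.0, seed=0):
    """Simulate an unbiased drift-diffusion process with absorbing bounds
    at 0 (error) and `boundary` (correct), starting at boundary / 2.
    Returns reaction times in seconds and a boolean accuracy array;
    trials that hit no bound within `max_time` are dropped."""
    rng = np.random.default_rng(seed)
    evidence = np.full(n_trials, boundary / 2.0)
    rt = np.full(n_trials, np.nan)
    correct = np.zeros(n_trials, dtype=bool)
    active = np.ones(n_trials, dtype=bool)
    for step in range(1, int(max_time / dt) + 1):
        n_active = int(active.sum())
        if n_active == 0:
            break
        # Euler step: deterministic drift plus Gaussian diffusion noise.
        increments = drift * dt + noise_sd * np.sqrt(dt) * rng.standard_normal(n_active)
        evidence[active] += increments
        hit_upper = active & (evidence >= boundary)
        hit_lower = active & (evidence <= 0.0)
        finished = hit_upper | hit_lower
        rt[finished] = step * dt + non_decision
        correct[hit_upper] = True
        active &= ~finished
    done = ~np.isnan(rt)
    return rt[done], correct[done]


def summarize(rt, correct):
    """Mean reaction time and proportion correct for one simulated context."""
    return {"mean_rt": float(rt.mean()), "accuracy": float(correct.mean())}


if __name__ == "__main__":
    # Illustrative values only: same drift, different boundary separation.
    low_caution = summarize(*simulate_ddm(drift=1.5, boundary=1.0, seed=1))
    high_caution = summarize(*simulate_ddm(drift=1.5, boundary=2.0, seed=2))
    print("low caution: ", low_caution)
    print("high caution:", high_caution)
```

With the drift held constant, the wider boundary yields slower but more accurate responses, which is the pattern the two task contexts were meant to induce.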

Furthermore, a simulation-based approach is proposed, which affords visual investigation of the performance of a simulated system informed by a particular set of DDM parameters. This was applied to the design and yielded valuable insights into the observed null effect, as well as into avenues for optimization.