An idea to invert volume conduction in EEG

Abstract

Volume conduction is a long-standing problem in EEG acquisition. It describes the distortion (above all spatial smearing and attenuation) that neural signals undergo as they pass through cerebrospinal fluid, tissue, bone and skin before being recorded on the scalp. This loss of signal quality makes it difficult to infer where the signals originate. Moreover, some share of the underlying dynamics is irreversibly lost. In past decades, researchers have developed clever source-separation techniques to unmix the contributing sources from the measured waveform, but these remain imperfect and carry a margin of error.

De-smearing is an approach to invert the effects of volume conduction in EEG signals by learning a mapping from extracranial to intracranial sensors, ideally with clinically relevant accuracy.

In principle, it transforms scalp EEG time series into signals whose quality approximates that of recordings taken directly from the cortical surface, but noninvasively. This level of data quality enables precise spatial localization of neural generators. Localizing malfunctioning populations of neurons is key in clinical domains, for example in determining epileptic foci (the cortical volume from which seizures emerge). Likewise, researchers value this ability because it allows a more fine-grained, noninvasive localization of functional architectures.

In a nutshell, de-smearing records the same sources from two angles: outside and inside the skull. Deep learning is then used to establish the structural relationship between these two data streams. Once trained, the model can reverse volume conduction by applying the learned mapping from scalp signals to cortical signals. The same principle has been applied in a different domain: Wi-Fi imaging.

Researchers have trained a model to retrieve the location and shape of a person moving in a room just by decoding the room's Wi-Fi signals. Essentially, they turned a Wi-Fi router into a camera.

The first component of this setup was capturing reflections of Wi-Fi, an electromagnetic signal typically oscillating at 2.4 GHz. Like light, it bounces off objects, and these reflections can be tracked. The researchers used video cameras to capture the position of a person in a room while tracking the corresponding Wi-Fi reflections in parallel. They then trained a model to map the reflected signal patterns onto the shape and location of their source, using the camera images as the reference. Once this relation was established, the visual data became redundant, as all spatial information could be reconstructed from the Wi-Fi reflections alone.

Take two measurements of the same source and let a model learn the structural relationship between them. Once it has, a single measurement suffices to retrieve the information in question.
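As a deliberately minimal illustration of this two-measurement principle, the sketch below simulates a hypothetical scalar source seen by two sensors: one attenuated and noisy (measurement A), one clean (measurement B). An ordinary least-squares line stands in for the deep model; the 0.3 attenuation factor, the noise level and all names are arbitrary assumptions, not part of any real setup.

```python
import random

def fit_linear_map(xs, ys):
    """Ordinary least-squares fit of y = a * x + b from paired observations."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    return a, mean_y - a * mean_x

rng = random.Random(0)
# Hidden source values, observed by both sensors during the "training" phase.
sources = [rng.uniform(-1.0, 1.0) for _ in range(200)]
meas_a = [0.3 * s + rng.gauss(0.0, 0.01) for s in sources]  # attenuated, noisy view
meas_b = list(sources)                                      # clean view (ground truth)

# Training: both measurements are available, so the relationship can be learned.
a, b = fit_linear_map(meas_a, meas_b)

# Deployment: only measurement A is taken; measurement B is predicted from it.
new_a = 0.3 * 0.5            # sensor A reading for a source value of 0.5
predicted_b = a * new_a + b
print(round(predicted_b, 2))  # close to the true source value 0.5
```

Once the fit exists, measurement B is no longer recorded, only predicted. De-smearing follows the same pattern, with EEG in the role of measurement A, ECoG in the role of measurement B, and a far richer model in place of the straight line.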

In the framework of de-smearing, parallel ECoG and EEG recordings of neural activity are fed into a model. One is invasive, the other noninvasive. The model learns a transform from EEG to ECoG, and once this is accomplished, the invasive recording is no longer needed.

Technicalities

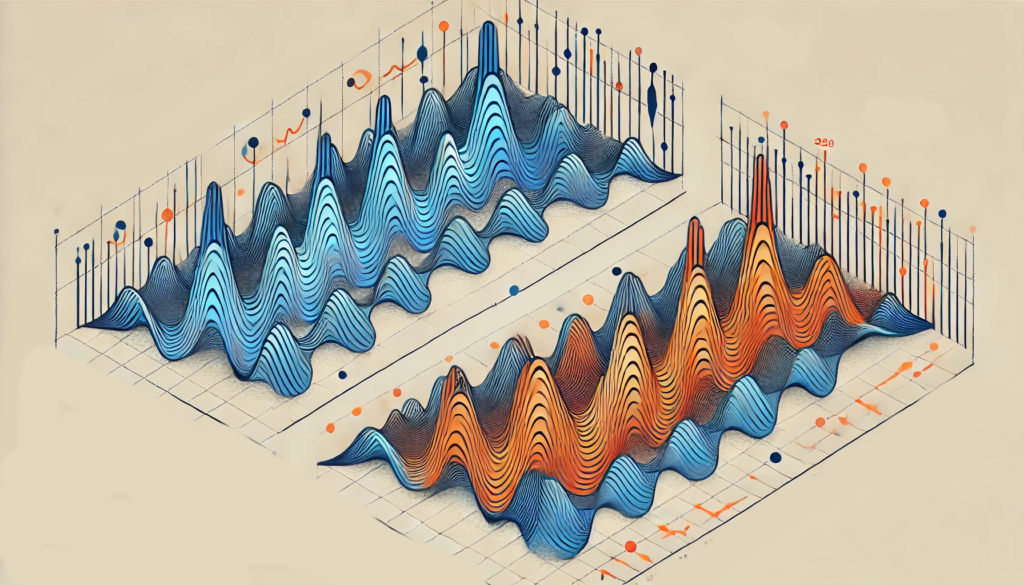

Computationally, de-smearing is a multivariate sequence-to-sequence translation problem. Encoder-decoder architectures with latent-space projections are therefore natural candidates, as they are known to handle time-series mappings well.

Seq2seq models, originally designed for tasks such as language translation, have an encoder-decoder structure. The encoder compresses the multivariate input sequence into a context vector, and the decoder generates the output sequence from it. These models typically use recurrent neural networks (RNNs) such as long short-term memory networks (LSTMs) or gated recurrent units (GRUs). Transformers share this encoder-decoder architecture, which likewise makes them suitable for sequential data such as natural language.
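The encoder-decoder idea can be sketched without any deep-learning framework. The sketch below is a hypothetical, untrained toy: a plain tanh recurrence stands in for LSTM or GRU cells, the weights are random, and the channel counts (4 EEG, 8 ECoG) and hidden size are arbitrary assumptions. It only shows how a window is folded into a context vector and then unrolled into an output sequence.

```python
import math
import random

def matvec(m, v):
    """Multiply a matrix m (list of rows) by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def add(*vectors):
    """Element-wise sum of equally long vectors."""
    return [sum(xs) for xs in zip(*vectors)]

def rand_matrix(rows, cols, rng, scale=0.1):
    """Small random weights; a trained model would learn these instead."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

class TinySeq2Seq:
    """Untrained tanh-RNN encoder-decoder (stand-in for LSTM/GRU cells)."""

    def __init__(self, in_ch, out_ch, hidden, seed=0):
        rng = random.Random(seed)
        self.hidden = hidden
        self.out_ch = out_ch
        self.enc_in = rand_matrix(hidden, in_ch, rng)    # input -> hidden
        self.enc_rec = rand_matrix(hidden, hidden, rng)  # hidden -> hidden
        self.dec_in = rand_matrix(hidden, out_ch, rng)   # previous output -> hidden
        self.dec_rec = rand_matrix(hidden, hidden, rng)  # hidden -> hidden
        self.readout = rand_matrix(out_ch, hidden, rng)  # hidden -> output channels

    def encode(self, xs):
        """Fold a (T x in_ch) sequence into one context vector of size `hidden`."""
        h = [0.0] * self.hidden
        for x in xs:
            pre = add(matvec(self.enc_in, x), matvec(self.enc_rec, h))
            h = [math.tanh(v) for v in pre]
        return h

    def decode(self, context, steps):
        """Unroll from the context, feeding each output back in (autoregressive)."""
        h = context
        y = [0.0] * self.out_ch
        outputs = []
        for _ in range(steps):
            pre = add(matvec(self.dec_rec, h), matvec(self.dec_in, y))
            h = [math.tanh(v) for v in pre]
            y = matvec(self.readout, h)
            outputs.append(y)
        return outputs

# Hypothetical shapes: 20 time steps of 4 EEG channels -> 20 steps of 8 ECoG channels.
model = TinySeq2Seq(in_ch=4, out_ch=8, hidden=16)
eeg_window = [[0.1 * t, 0.0, -0.1 * t, 0.05] for t in range(20)]
context = model.encode(eeg_window)
ecog_estimate = model.decode(context, steps=20)
print(len(context), len(ecog_estimate), len(ecog_estimate[0]))  # 16 20 8
```

Here 80 input values (20 steps x 4 channels) are summarized by a 16-number context vector. In practice, a framework's LSTM or GRU layers and a training loop on paired recordings would replace these random weights.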

In the multivariate setting, each time step (of duration dt) consists of one voltage sample from each of the k electrodes. The encoder captures the temporal dependencies and the relationships among these channels, transforming the input sequence into a latent-space representation. This latent space, a lower-dimensional representation of the input data, is crucial for efficiently capturing the underlying structure and dependencies in the data.
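Before any model sees the data, paired recordings have to be cut into aligned (input, target) windows, each a sequence of time steps with one voltage per electrode. The following is a sketch under simple assumptions: both recordings are already synchronized and resampled to the same rate, and the window and hop lengths, channel counts and helper names are hypothetical.

```python
def make_windows(signal, window, hop):
    """Slice a recording (list of time steps, each a list of k channel voltages)
    into overlapping windows of `window` time steps, advancing by `hop`."""
    return [signal[start:start + window]
            for start in range(0, len(signal) - window + 1, hop)]

def paired_windows(eeg, ecog, window, hop):
    """Cut time-aligned EEG/ECoG recordings into matching (input, target) pairs."""
    if len(eeg) != len(ecog):
        raise ValueError("recordings must be time-aligned and equally long")
    return list(zip(make_windows(eeg, window, hop), make_windows(ecog, window, hop)))

# Hypothetical toy recordings: 100 time steps, 3 EEG channels, 6 ECoG channels.
eeg = [[float(t)] * 3 for t in range(100)]
ecog = [[float(t)] * 6 for t in range(100)]
pairs = paired_windows(eeg, ecog, window=25, hop=10)
print(len(pairs))                                # 8
print(len(pairs[0][0][0]), len(pairs[0][1][0]))  # 3 6
```

Input and target windows may have different channel counts (k EEG electrodes versus the ECoG grid size); only the time axis must match.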

The decoder then takes this latent representation and generates the desired output sequence, preserving the dependencies learned from the input. Attention mechanisms can be added to focus the decoder on the relevant parts of the input sequence, which improves performance, especially with long sequences.
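The attention step can be sketched as scaled dot-product attention (the variant used in transformers): the decoder's current state acts as a query, is compared with every encoder state, and the resulting softmax weights decide how much each input time step contributes. The toy 2-dimensional states below are made up for readability.

```python
import math

def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot_product_attention(query, keys, values):
    """Weight each encoder time step by how well its key matches the decoder
    query (scaled by sqrt of the dimension), then return the weighted sum of values."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    context = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
    return context, weights

# Hypothetical encoder states for 4 input time steps (used as both keys and values).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
decoder_query = [4.0, 0.0]
context, weights = dot_product_attention(decoder_query, encoder_states, encoder_states)
print([round(w, 3) for w in weights])
```

The first and third input steps, whose states align with the query, receive most of the weight; the step pointing in the opposite direction is nearly ignored.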

Challenges

Promising as it is, the concept faces several challenges. The most obvious one is data acquisition. ECoG is most commonly used intraoperatively in epilepsy patients to localize the seizure onset, a setting in which scalp EEG cannot be recorded. In extraoperative ECoG, however, patients keep the implant for up to a few weeks, and this is the population in which parallel recordings would be possible. Because of the risks involved, the procedure is reserved for severe clinical cases, so the resulting pathology bias in the training data could distort the learned mapping.

A typical ECoG grid consists of 40-80 electrodes with 5-10 mm inter-electrode spacing (REF). This is far denser than EEG caps, which have 20-30 mm spacing between electrodes even in a 128-channel montage. Since each scalp electrode therefore integrates activity from many cortical sites, the relation from ECoG to EEG resembles a spatial convolution (smoothing).
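The convolution-like relation can be illustrated with a one-dimensional toy forward model: a line of cortical sites 5 mm apart (ECoG-like spacing) is blurred by a Gaussian kernel and then sampled every 25 mm (EEG-like spacing). The Gaussian shape and its 15 mm width are assumptions chosen for illustration; real volume conduction depends on head geometry and tissue conductivities and is not a simple Gaussian.

```python
import math

def gaussian_kernel(sigma_mm, spacing_mm, half_width):
    """Normalized 1D Gaussian weights; a crude stand-in for volume-conduction blur."""
    weights = [math.exp(-0.5 * ((i * spacing_mm) / sigma_mm) ** 2)
               for i in range(-half_width, half_width + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def convolve_same(signal, kernel):
    """Zero-padded 1D convolution that keeps the input length
    (the kernel is symmetric, so correlation and convolution coincide)."""
    half = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i + j - half
            if 0 <= idx < len(signal):
                acc += w * signal[idx]
        out.append(acc)
    return out

ecog_line = [0.0] * 40   # 40 cortical sites, 5 mm apart (a 200 mm strip)
ecog_line[20] = 100.0    # one focal source
kernel = gaussian_kernel(sigma_mm=15.0, spacing_mm=5.0, half_width=9)
smeared = convolve_same(ecog_line, kernel)
eeg_line = smeared[::5]  # hypothetical scalp electrodes every 25 mm
print([round(v, 1) for v in eeg_line])
```

The single 100-unit focal source ends up spread over many neighbouring sites with a much lower peak, and only a few of the scalp samples see it at all. De-smearing would try to learn the inverse of this kind of many-to-one blurring.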

On the data side, SNR deteriorates considerably from ECoG to EEG because of volume conduction. Additionally, ECoG signals have much larger amplitudes, which raises the question of how to bring both modalities onto a meaningful common scale.
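One straightforward answer to the scale question is to standardize each channel of each modality separately before training, keeping the statistics so that model outputs can be mapped back to physical units. The sketch assumes z-scoring as the normalization (other choices are possible) and uses toy values in which the ECoG numbers are simply the EEG numbers scaled by 10; the helper names are hypothetical.

```python
import statistics

def fit_channel_scaler(recording):
    """Per-channel (mean, std) of a (time x channels) recording."""
    stats = []
    for channel in zip(*recording):
        std = statistics.pstdev(channel)
        stats.append((statistics.fmean(channel), std if std > 0 else 1.0))  # guard flat channels
    return stats

def standardize(recording, stats):
    return [[(v - m) / s for v, (m, s) in zip(step, stats)] for step in recording]

def destandardize(recording, stats):
    return [[v * s + m for v, (m, s) in zip(step, stats)] for step in recording]

# Toy amplitudes (arbitrary units): ECoG is the EEG scaled by 10, purely for illustration.
eeg = [[10.0, -5.0], [20.0, 5.0], [30.0, 0.0]]
ecog = [[100.0, -50.0], [200.0, 50.0], [300.0, 0.0]]
eeg_stats, ecog_stats = fit_channel_scaler(eeg), fit_channel_scaler(ecog)
eeg_z = standardize(eeg, eeg_stats)
ecog_z = standardize(ecog, ecog_stats)
# A model would map eeg_z -> ecog_z; its output is then mapped back to ECoG units.
restored = destandardize(ecog_z, ecog_stats)
```

Note that z-scoring hides absolute amplitude from the model; whether that is acceptable for a physically meaningful mapping is part of the scale question itself.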

Most importantly, generalizability is a major open question. Even if an accurate mapping is established for one patient, transferring it to other patients is likely to be very challenging.

Several factors play a role, such as the anatomical properties of the skull and the meninges. To generalize beyond a single subject, variation in skull thickness across the head is a relevant factor. This could be accounted for by an MRI scan from which a skull-wide thickness index is extracted, so that the model can control for it. The placement of arteries is another relevant factor to consider.
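Letting the model "control for" skull thickness could be as simple as feeding the MRI-derived index to the network alongside the EEG. The sketch below assumes a single, already normalized, subject-level value (the hypothetical `skull_thickness_index`) and appends it as a constant extra channel to every time step; a per-electrode thickness map could be appended the same way.

```python
def add_subject_covariates(window, covariates):
    """Append subject-level covariates (constant over time) to every time step.
    Returns new lists; the input window is left unchanged."""
    return [step + list(covariates) for step in window]

# Hypothetical MRI-derived, normalized skull thickness index for one subject.
skull_thickness_index = 0.85
eeg_window = [[0.2, -0.1, 0.4], [0.3, 0.0, 0.5]]  # 2 time steps x 3 channels
augmented = add_subject_covariates(eeg_window, covariates=[skull_thickness_index])
print(augmented)  # [[0.2, -0.1, 0.4, 0.85], [0.3, 0.0, 0.5, 0.85]]
```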

The upside is that the nonlinearities of neural firing should not pose a problem, since disentangling them is not the goal of the mapping. Rather, de-smearing takes the cortical signal as ground truth and only aims to undo the distortion that arises as the signal passes through the matter between its generators and the scalp electrodes.

Closing thoughts

An obvious next step is to bring the model to real-time applications and explore its use in brain-computer interfaces (BCIs). BCIs map neural activity onto commands that a machine then executes, so this field would also benefit from de-smeared input signals.
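For real-time use, the model would have to run causally on a live stream rather than on complete recordings. A minimal sketch, assuming a hypothetical trained `model` callable that maps one window to an output: a fixed-length buffer holds the most recent samples, and the model is invoked every `hop` samples. Here a channel-wise mean stands in for the network.

```python
from collections import deque

class StreamingDesmearer:
    """Keep the most recent `window` EEG samples and run `model` every `hop` samples."""

    def __init__(self, model, window, hop):
        self.model = model
        self.window = window
        self.hop = hop
        self.buffer = deque(maxlen=window)  # oldest samples drop out automatically
        self.since_last = 0

    def push(self, sample):
        """Add one multichannel sample; return a model output when a new window is due."""
        self.buffer.append(sample)
        self.since_last += 1
        if len(self.buffer) == self.window and self.since_last >= self.hop:
            self.since_last = 0
            return self.model(list(self.buffer))
        return None

def channel_mean(window):
    """Placeholder 'model': averages each channel over the window."""
    return [sum(ch) / len(ch) for ch in zip(*window)]

stream = StreamingDesmearer(channel_mean, window=4, hop=2)
outputs = [stream.push([float(t), -float(t)]) for t in range(8)]
```

The first output appears only once the buffer is full; after that, one output is produced every `hop` samples, and latency is bounded by the window length plus the model's inference time.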
